Becky Gilbert, Jenni Rodd, and collaborators have a new paper out as an online-first article in the Journal of Experimental Psychology: Learning, Memory, and Cognition. Details of the paper are below:

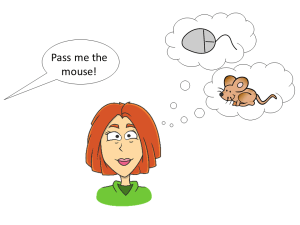

Title: Listeners and Readers Generalize Their Experience With Word Meanings Across Modalities.

Authors: Rebecca A. Gilbert, Matthew H. Davis, Gareth M. Gaskell, and Jennifer M. Rodd

Abstract:

Research has shown that adults’ lexical-semantic representations are surprisingly malleable. For instance, the interpretation of ambiguous words (e.g., bark) is influenced by experience such that recently encountered meanings become more readily available (Rodd et al., 2016, 2013). However, the mechanism underlying this word-meaning priming effect remains unclear, and competing accounts make different predictions about the extent to which information about word meanings that is gained within one modality (e.g., speech) is transferred to the other modality (e.g., reading) to aid comprehension. In two Web-based experiments, ambiguous target words were primed with either written or spoken sentences that biased their interpretation toward a subordinate meaning, or were unprimed. About 20 min after the prime exposure, interpretation of these target words was tested by presenting them in either written or spoken form, using word association (Experiment 1, N = 78) and speeded semantic relatedness decisions (Experiment 2, N = 181). Both experiments replicated the auditory unimodal priming effect shown previously (Rodd et al., 2016, 2013) and revealed significant cross-modal priming: primed meanings were retrieved more frequently and swiftly across all primed conditions compared with the unprimed baseline. Furthermore, there were no reliable differences in priming levels between unimodal and cross-modal prime-test conditions. These results indicate that recent experience with ambiguous word meanings can bias the reader’s or listener’s later interpretation of these words in a modality-general way. We identify possible loci of this effect within the context of models of long-term priming and ambiguity resolution.